Haotian Xue

4th-year PhD (Machine Learning), Georgia Tech. Advised by Yongxin Chen.

About & Research Interests

I do research in machine learning and computer vision. Currently, I am interested in physics AI in the context of multi-modal language models, video diffusion models, and world models. I am also committed to safety problems in generative AI, focusing on adversarial protection of diffusion models.

I did research at Adobe Firefly (2025), NVIDIA DIR (2024), and Microsoft Research Asia (2021). I was also a visiting student at MIT CSAIL (2021). I earned my B.E. in Computer Science (Honors) from Shanghai Jiao Tong University (Zhiyuan Honor Program) in 2022.

News

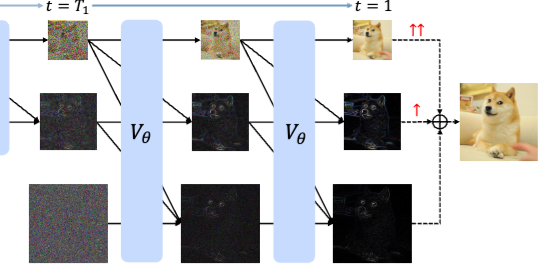

- 2026-01 LapFlow is accepted to ICLR 2026. We propose a Laplacian multi-scale flow matching method for generation.

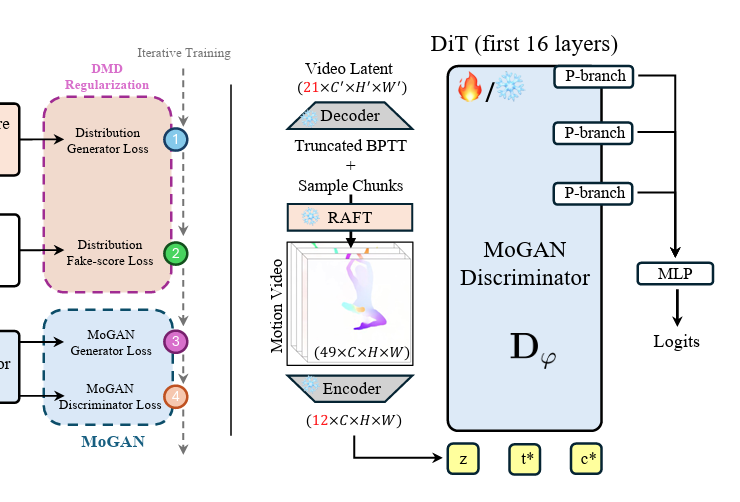

- 2025-10 We propose MoGAN, a novel post-training method to improve motion quality for few-step video diffusion models.

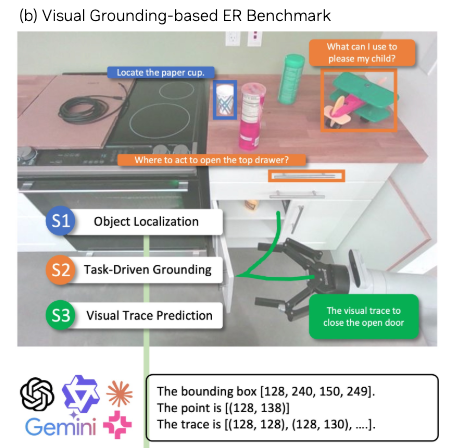

- 2025-09 We propose PIO-Bench, a visual-grounding-centric benchmark for embodied reasoning of VLMs.

- 2025-04 Joining Adobe Firefly (Summer+Fall) to work on post-training for video diffusion.

- 2024-10 NeurIPS 2024 Scholar Award. See you in Vancouver!

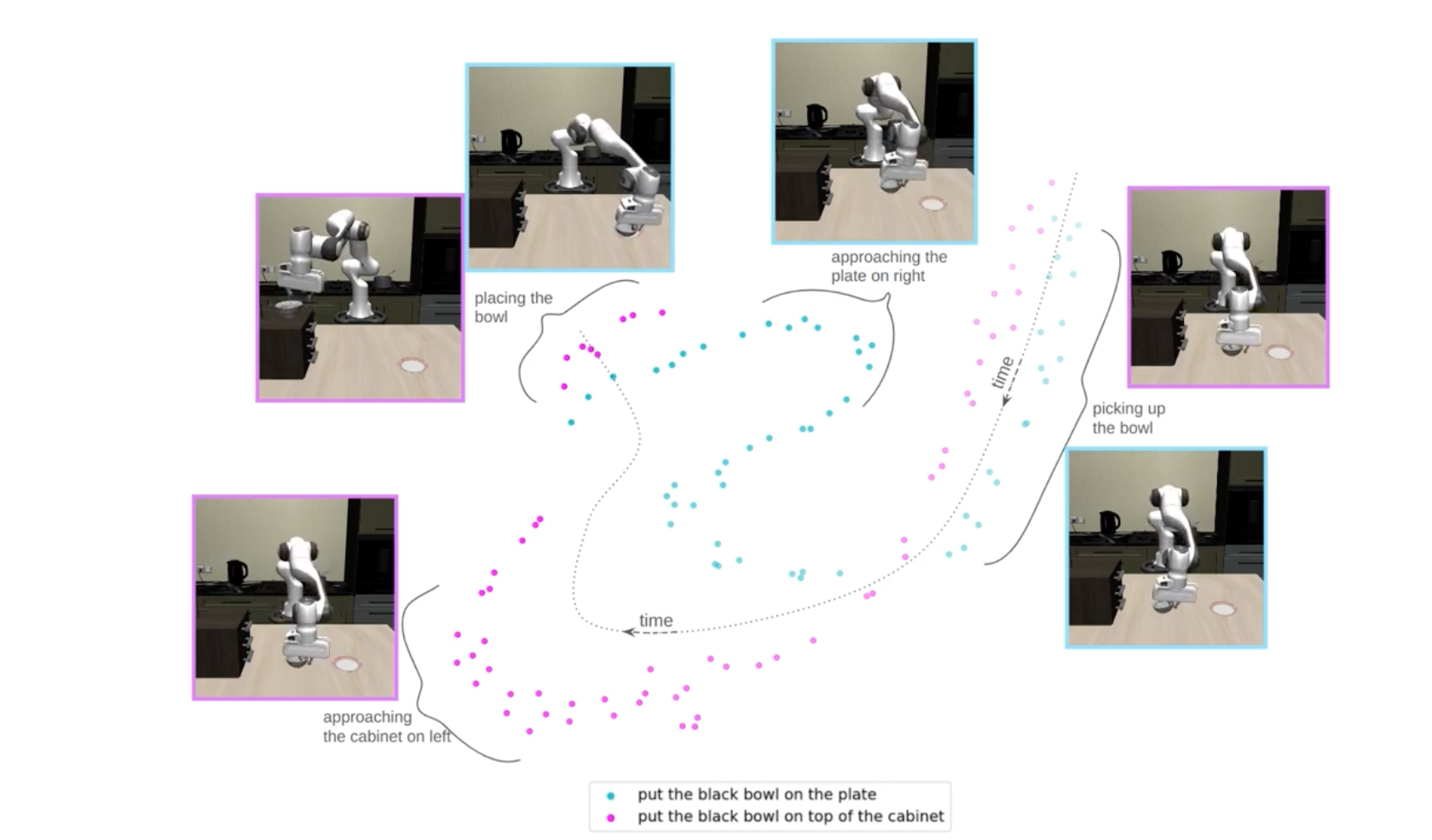

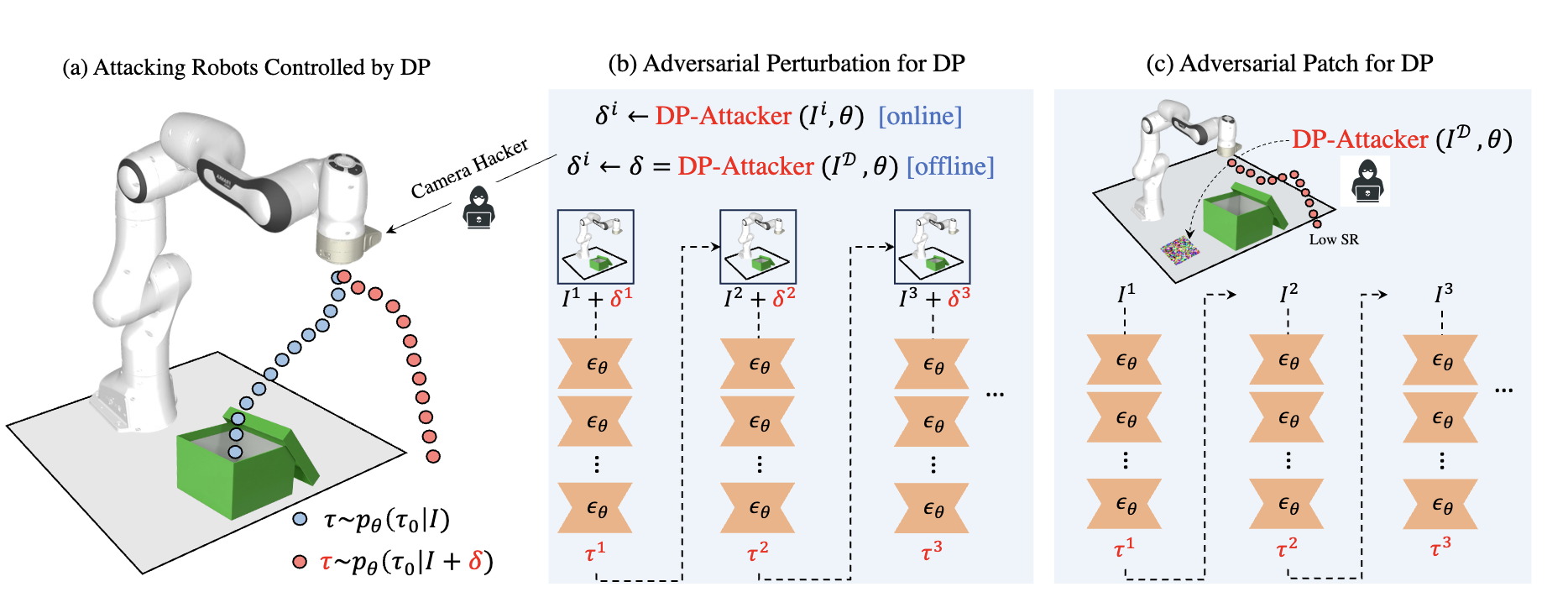

- 2024-09 Three papers accepted to NeurIPS 2024: DP-Attacker, RefDrop, QueST.

- 2024-05 Started summer research intern at

NVIDIA DIR Group.

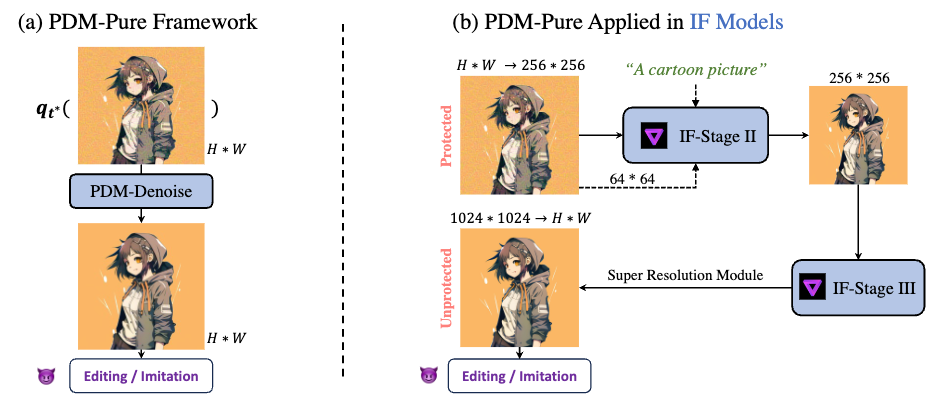

NVIDIA DIR Group. - 2024-04 Released PDM-Pure, a universal purifier against diffusion models.

- 2024-03 ICLR 2024 Travel Award.

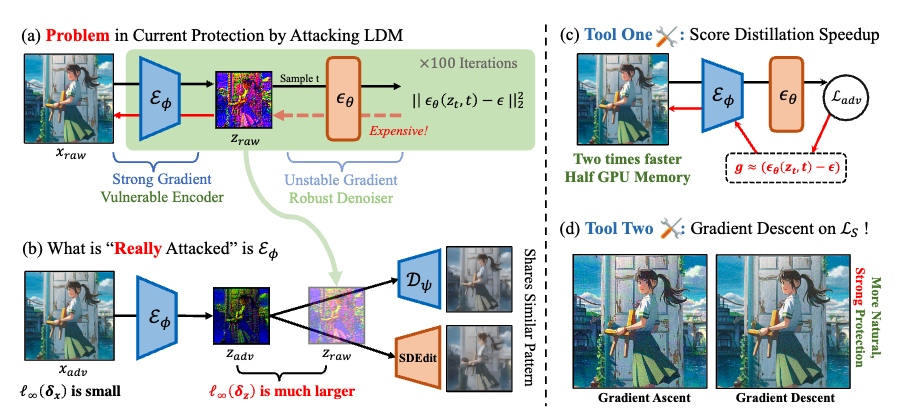

- 2024-01 SDS-Attack accepted to ICLR 2024.

- 2023-10 NeurIPS 2023 Scholar Award; invited reviewer for TPAMI.

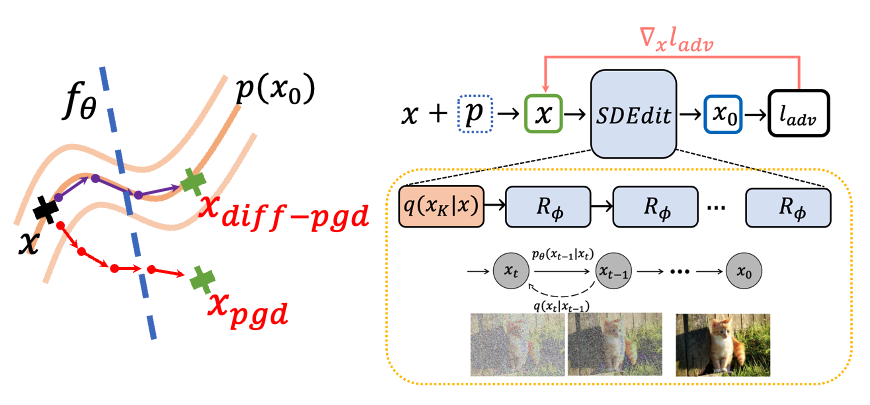

- 2023-09 Diff-PGD and 3D-IntPhys accepted to NeurIPS 2023.

- 2023-08 Invited reviewer for ICLR 2024.

- 2023-05 Proposed Diff-PGD, a diffusion-based framework for generating adversarial samples.

- 2022-12 Selected as a Top Reviewer of NeurIPS 2022.

- 2022-10 Distance-Transformer accepted to EMNLP 2022 Findings.

- 2022-08 Started PhD at ML@GT.

Selected Publications

More & older publications

See Google Scholar for the full, latest list.

Reviewer Experience

- NeurIPS

- ICLR

- ICML

- AISTATS

- CVPR

- AAAI

- TPAMI

- TCSVT